How to Create a Robots txt File for Webflow

Learn how to create a robots txt file to control search engine crawlers and improve your Webflow SEO. This guide covers syntax, examples, and setup.

Creating a robots.txt file is surprisingly simple. All you need is a plain text editor to write out your directives, like User-agent: * and Disallow: /private/. Once you've saved it as robots.txt, you just upload it to your website's root directory. That tiny file is the first thing search engine crawlers look for when they visit your site.

Why Your Robots txt File Is a Crucial SEO Tool

Before we jump into the nitty-gritty of creating one, it's worth understanding why this little text file packs such a punch. Your robots.txt is way more than just a technical tick-box; it's a cornerstone of any decent SEO strategy. Think of it as a friendly gatekeeper for search engine bots, giving them clear instructions on how to crawl your Webflow site efficiently and without wasting their time.

For any business trying to make an impact online, managing how bots crawl your site is non-negotiable. A well-crafted file makes sure your most important, conversion-focused pages get the attention they deserve from Google.

Maximising Your Crawl Budget

Every website gets an allocated "crawl budget"—basically, the amount of time and resources search engines like Google will spend crawling your pages. A robots.txt file is your key to making the most of it. By blocking off unimportant pages, like internal search results or admin login areas, you tell crawlers to focus on what actually matters.

This strategic guidance stops crawlers from getting bogged down on low-value URLs. Instead, it points them straight to your core service pages, blog posts, and product listings, which is absolutely vital for getting indexed faster and boosting your ranking potential.

In the UK’s hyper-connected market, this becomes even more important. With an internet penetration rate hitting 97.8% at the start of 2025, the question isn't if search engines will find you, but how they'll understand your site's structure. For a Webflow site built to bring in business, a single misplaced Disallow rule could hide your most important landing pages from Google, completely derailing your hard work.

Getting your robots.txt right is one of the most fundamental SEO optimization best practices for a reason. This control is a key part of an effective Webflow SEO optimisation strategy, ensuring your technical setup is working for you, not against you.

Understanding The Core Robots txt Directives

Getting the syntax right in your robots.txt file is everything. One tiny typo can accidentally hide your entire website from Google, and all that hard SEO work goes down the drain. This file speaks to web crawlers using a simple language built on a handful of core commands, known as directives.

Think of each directive as a specific, non-negotiable instruction for the bots. Let's walk through the main ones you'll be using.

User-agent: The Who

First up is User-agent. This directive always kicks off a new set of rules and points to the specific web crawler you're talking to. You can get really specific and target bots like Googlebot or Bingbot by name, or you can just use a wildcard (*) to give instructions to every bot out there.

For most sites, starting with a universal rule is the easiest way to go:

User-agent: *

This line is basically you shouting, "Hey, all search engine bots, listen up! The following rules are for you."

Disallow: The What Not To Do

Next, we have Disallow. This is the workhorse of your robots.txt file, telling crawlers which directories or files they should not crawl. It's perfect for keeping crawlers away from things like internal admin folders, thank-you pages, or asset directories.

For instance, to block a folder named /admin/, your rule would be dead simple:

Disallow: /admin/

Just remember that paths are case-sensitive. Disallow: /Admin/ would be a completely different rule. Getting these details right is crucial for solid Webflow technical SEO, as a small mistake can have big, unintended consequences. (You can find more on that here: https://www.derrick.dk/integration-faqs/webflow-technical-seo)
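If you want to see the case-sensitivity point for yourself, you can run both spellings through a parser. Here's a minimal sketch using Python's standard-library urllib.robotparser; the /admin/login path is purely illustrative.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only: block the lowercase /admin/ folder for all bots.
rules = """\
User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# Matching is case-sensitive, so only the exact lowercase path is blocked.
lower_allowed = parser.can_fetch("*", "/admin/login")
upper_allowed = parser.can_fetch("*", "/Admin/login")

print(f"/admin/login allowed: {lower_allowed}")
print(f"/Admin/login allowed: {upper_allowed}")
```

The lowercase path comes back as blocked while the capitalised one stays crawlable, which is exactly the kind of gap a stray capital letter can open up.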

Allow: The Exception To The Rule

So what if you want to block an entire directory but let a crawler access just one specific file inside it? That's where the Allow directive comes in. It's designed to override a Disallow rule.

Let’s say you’ve blocked your /assets/ folder, but you need Googlebot to see a critical CSS file in there. The rules would look like this:

User-agent: Googlebot
Disallow: /assets/
Allow: /assets/style.css

This tells Googlebot to ignore everything in /assets/ except for that one style.css file. It's a fantastic tool for fine-tuning crawler access with surgical precision.
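Why does the Allow line win here? Google documents that the most specific matching rule (the one with the longest path) decides, and that Allow wins a tie. The sketch below is a simplified take on that idea, not Google's actual matcher: it only handles plain path prefixes (no * or $ wildcards), and the function names are made up for illustration.

```python
def winning_rule(rules, path):
    """Return the (directive, pattern) pair that decides `path`, or None.

    Simplified sketch: plain prefix matching only (no * or $ wildcards).
    The longest matching pattern wins; on a tie, Allow beats Disallow.
    """
    best = None
    for directive, pattern in rules:
        if not pattern or not path.startswith(pattern):
            continue  # empty or non-matching patterns never decide anything
        if best is None:
            best = (directive, pattern)
            continue
        longer = len(pattern) > len(best[1])
        tie_for_allow = len(pattern) == len(best[1]) and directive == "allow"
        if longer or tie_for_allow:
            best = (directive, pattern)
    return best


def is_allowed(rules, path):
    """A path is crawlable unless the deciding rule is a Disallow."""
    rule = winning_rule(rules, path)
    return rule is None or rule[0] == "allow"


# The Googlebot group from the example above, as (directive, pattern) pairs.
googlebot_rules = [
    ("disallow", "/assets/"),
    ("allow", "/assets/style.css"),
]

print(is_allowed(googlebot_rules, "/assets/style.css"))  # the Allow exception applies
print(is_allowed(googlebot_rules, "/assets/logo.png"))   # still covered by the Disallow
print(is_allowed(googlebot_rules, "/about"))             # no rule matches at all
```

Not every tool resolves conflicts this way. Some parsers simply apply the first rule that matches, so it's worth checking how the crawler you care about behaves before relying on rule order.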

To make things even clearer, here's a quick reference table for the most common directives you'll encounter.

Common Robots txt Directives Explained

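This little cheat sheet covers the fundamentals for most websites.

- User-agent: names the crawler a group of rules applies to. Example: User-agent: *
- Disallow: tells that crawler which path it should not crawl. Example: Disallow: /admin/
- Allow: carves out an exception inside a disallowed path. Example: Allow: /assets/style.css
- Sitemap: points crawlers to your XML sitemap. Example: Sitemap: https://www.yourwebsite.co.uk/sitemap.xml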

A common misconception is that you need to add Allow: / to give crawlers full access. That's not how it works. By default, crawlers assume they can access everything. You only need to use the Allow directive to create specific exceptions to your Disallow rules.

It's also worth keeping an eye on how bot management is evolving. New conventions keep popping up, like the proposed llms.txt file, which aims to give site owners a say in how AI models use their content.

Once you get a handle on these commands, you'll feel much more confident when you create a robots.txt file. You'll be able to guide search engines exactly where you want them to go—and keep them out of where they don't belong.

Getting Your Webflow Robots.txt Right

Alright, now that we’ve covered the theory, let's get our hands dirty and build a robots.txt file specifically for a Webflow project. We'll start with a clean, basic setup and then add a few specific rules for common situations you’ll definitely run into.

Webflow is a fantastic platform, but like any system, it has its own set of utility pages and file structures that are best kept away from search engine crawlers. A well-configured file makes sure Google and other bots focus only on the pages you've spent hours designing for your audience.

Starting with a Clean Slate

The simplest and most effective starting point for pretty much any website is a robots.txt file that gives all crawlers full access. This is technically the default behaviour even if you don't have a file, but creating one explicitly lays the groundwork for any custom rules you'll want to add later.

Here’s the most basic template you'll need:

User-agent: *
Disallow:

Sitemap: https://www.yourwebsite.co.uk/sitemap.xml

This little snippet does three very important things:

- User-agent: * is a catch-all, speaking to every search engine bot that comes knocking.
- Disallow: is left blank, which essentially says, "Come on in, nothing is off-limits."
- Sitemap: is crucial. It points crawlers directly to your XML sitemap, which is the definitive map of all the URLs you want them to find and index. Just be sure to swap out the placeholder with your actual sitemap URL.

This is your foundation. From here, we can start adding specific rules to guide the bots more precisely.
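If you manage more than one site, it can be handy to generate this template rather than retype it. Here's a small sketch in Python; build_robots_txt is a hypothetical helper name, and the domain is the same placeholder used above.

```python
def build_robots_txt(sitemap_url, disallow_paths=()):
    """Return robots.txt text for all crawlers.

    With no disallow_paths, this produces the allow-everything template:
    an empty Disallow line followed by the Sitemap line.
    """
    lines = ["User-agent: *"]
    if disallow_paths:
        lines.extend(f"Disallow: {path}" for path in disallow_paths)
    else:
        lines.append("Disallow:")
    lines.append("")  # blank line separates the rule group from the sitemap
    lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


# Placeholder domain taken from the template above; swap in your own.
template = build_robots_txt("https://www.yourwebsite.co.uk/sitemap.xml")
print(template)
```

Passing a list such as ["/search"] as the second argument swaps the empty Disallow line for one Disallow line per path, so the same helper can grow with your rules.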

Blocking Common Webflow Utility Pages

Webflow automatically generates certain pages for its own internal functions, like the password-protected page template. These pages have zero value in search results and can just clutter up your indexing reports if they get picked up.

You can proactively tell bots to ignore these by adding a couple of lines:

# Block Webflow's default utility pages
Disallow: /_utility/
Disallow: /search

The /_utility/ path takes care of the standard password page, and /search blocks your internal site search results page. Blocking the search page is a good move because it can generate tons of low-value, duplicate-content-style pages that you don't want indexed. It's a simple fix that keeps things tidy.

Google's own documentation illustrates how this works: the crawler always checks for robots.txt first before it even tries to crawl a page, which shows just how important this file is as your site's first line of communication with bots.

Fencing Off Staging and Dev Areas

Most of us use a staging subdomain or a specific directory to test changes before they go live. The last thing you want is for Google to start indexing your half-finished pages, broken layouts, or test environments. It just looks unprofessional.

If your staging area is inside a subdirectory, like /staging/, blocking it is dead simple.

# Block the staging environment from being indexed
Disallow: /staging/

This one line tells crawlers not to venture into your test zone, ensuring only the polished, final version of your site makes it into search results. Forgetting this is a surprisingly common mistake, and it can lead to some very confusing results for users who stumble upon your test pages.

Pro Tip: While robots.txt is great for asking crawlers to stay away, it’s not a security tool. For truly sensitive directories, you should always use password protection on your server. A Disallow rule is just a polite request; it doesn't actually stop a determined bot or bad actor.

Don't Accidentally Block CSS and JavaScript

Modern websites are built on CSS and JavaScript. They control how everything looks and functions. If you accidentally block these resources, Googlebot might not be able to "see" your page the way a user does. This is what's known as a rendering issue, and it can absolutely tank your rankings.

For this reason, you should almost never block your asset folders. The good news is that our starting template—the one with the empty Disallow rule—already takes care of this by allowing access to everything by default. No extra work is needed.

So, putting it all together, a solid, dependable robots.txt for a typical Webflow business site might look something like this:

User-agent: *
# Block Webflow utility pages and internal search
Disallow: /_utility/
Disallow: /search
# Block a temporary staging directory if you use one
Disallow: /staging/

Sitemap: https://www.yourwebsite.co.uk/sitemap.xml

This file is simple, clean, and gets the job done. It gives crawlers clear directions, points them to your most important content with the sitemap, and keeps them out of the areas that offer no SEO value. You're now ready to create a robots.txt file that actually helps your site grow.
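Before pasting a file like this anywhere, it's worth a quick local sanity check. The sketch below feeds the complete file to Python's standard-library parser and asks it about a handful of paths. The sample paths are invented for illustration, and Python's parser is only an approximation of how Googlebot reads the file.

```python
from urllib.robotparser import RobotFileParser

# The complete file from above, with the placeholder domain and paths.
ROBOTS_TXT = """\
User-agent: *
# Block Webflow utility pages and internal search
Disallow: /_utility/
Disallow: /search
# Block a temporary staging directory if you use one
Disallow: /staging/

Sitemap: https://www.yourwebsite.co.uk/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical paths: three that should be blocked, three that should not.
sample_paths = [
    "/_utility/password",
    "/search?query=webflow",
    "/staging/new-home",
    "/",
    "/blog/robots-guide",
    "/css/site.css",
]

blocked = [p for p in sample_paths if not parser.can_fetch("*", p)]
print("Blocked:", blocked)
print("Sitemaps:", parser.site_maps())
```

Only the first three paths should come back as blocked, and the stylesheet stays crawlable. One thing to keep in mind: rules are prefix matches, so Disallow: /search would also catch a page at a path like /search-tips if you ever created one.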

Right, you've hammered out the perfect set of rules for your robots.txt file. Now for the most important bit: getting it live on your Webflow site and making sure it actually works. This isn't your typical file upload; it’s all about using Webflow’s built-in tools the right way.

Webflow handles the robots.txt file a little differently from traditional hosting. You won't be firing up an FTP client to upload a text file. Instead, you'll add the content directly into your project settings, which makes managing it dead simple once you know where to look.

Adding Your Code in Webflow

First up, head to your Webflow Site settings (previously called Project Settings) and open the SEO tab. In the Indexing section, you'll find a field specifically for your robots.txt file. It's just a simple text box waiting for your directives.

This is where you'll paste the entire contents of the file you’ve put together.

- Copy all your rules, starting with User-agent: *.
- Paste everything straight into the robots.txt field.
- Hit Save Changes, and then publish your site to push the changes live.

And that's it. Your robots.txt is now active and can be found at yourdomain.co.uk/robots.txt.
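If you'd rather confirm that from a script than a browser, here's a rough sketch. It builds the robots.txt URL for your domain, downloads the published file, and compares it with the rules you meant to publish. The helper names are hypothetical, and the comparison assumes the platform serves your text unchanged; if it adds lines of its own, you'll simply get a mismatch to investigate.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(site_url):
    """Build the robots.txt URL for the host that serves `site_url`."""
    parts = urlsplit(site_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def normalise(text):
    """Strip trailing spaces and blank lines so formatting noise is ignored."""
    lines = (line.rstrip() for line in text.splitlines())
    return [line for line in lines if line]


def live_matches_expected(site_url, expected_text, fetch=None):
    """Compare the published file with the rules you meant to publish."""
    if fetch is None:
        # Default fetcher performs a real HTTP request.
        def fetch(url):
            with urlopen(url, timeout=10) as response:
                return response.read().decode("utf-8")
    return normalise(fetch(robots_url(site_url))) == normalise(expected_text)
```

Calling live_matches_expected("https://www.yourdomain.co.uk/", your_rules) after publishing returns True when the live file carries the same lines you pasted in. The optional fetch argument is only there so the comparison can be tried without touching the network.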

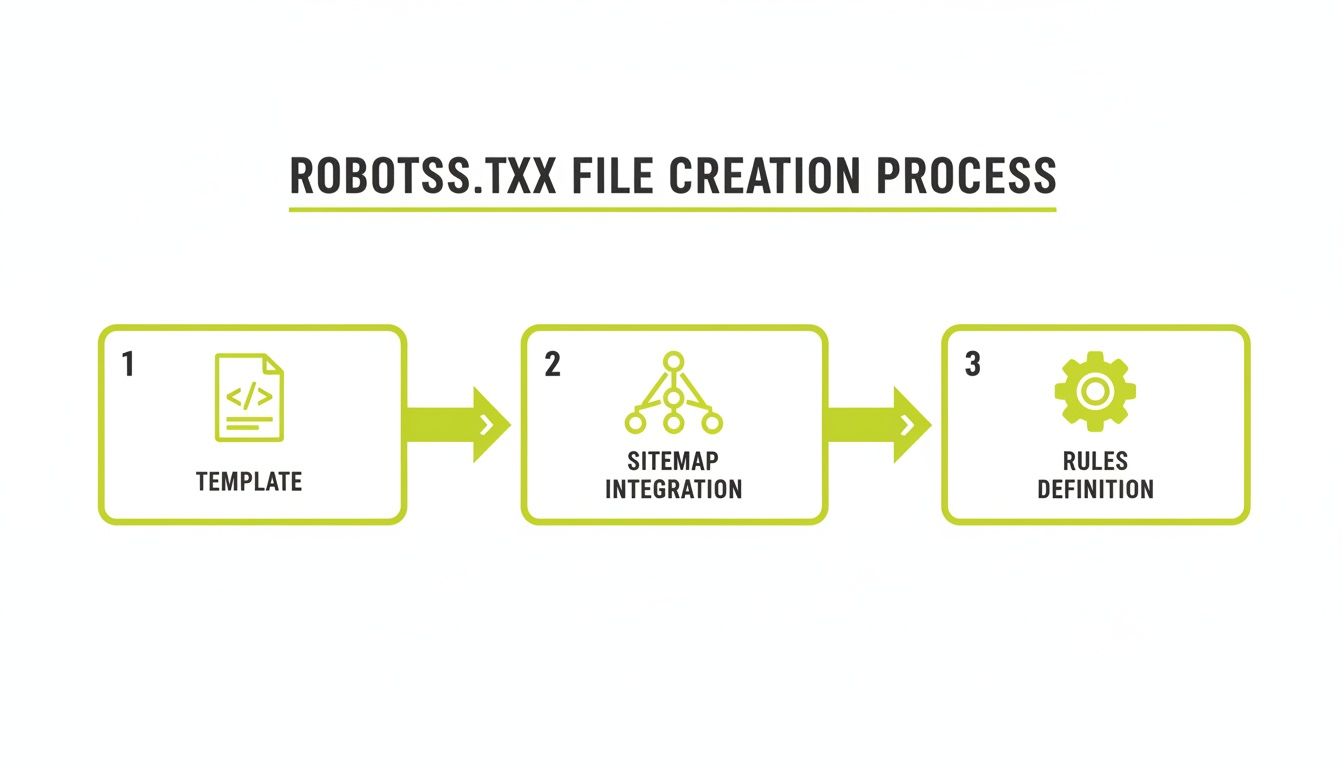

This quick graphic breaks down the essential steps for building an effective robots.txt before you even get to Webflow.

Moving from a basic template to defining specific rules and slotting in your sitemap is a foundational process for any solid implementation.

Validating Your File with Google Search Console

Once your file is live, you absolutely must test it. Don't ever just assume it's working. A single typo can have catastrophic consequences, like accidentally blocking your entire site from Google. Luckily, Google Search Console has the perfect tool for the job.

The robots.txt report is a free tool inside Google Search Console (it replaced the older Robots.txt Tester) that shows the robots.txt files Google found for your site, when each was last fetched, and any syntax errors or warnings. It’ll flag any issues it finds and tell you which line is causing the trouble.

Think of the report as a critical safety net. It’s like proofreading a vital email before hitting send. This one step can save you from massive SEO headaches down the road.

To use it, just open the report for your verified property. It automatically pulls in your live robots.txt file and highlights any errors on the spot. This immediate feedback is invaluable, especially when you’re wrestling with complex rules. It's a non-negotiable step in any technical audit, much like the small but crucial checks you'd find in a detailed website migration checklist.

Testing Specific URLs

Beyond checking the file as a whole, it pays to check individual URLs against your rules. The retired Robots.txt Tester did this directly; today the closest equivalent in Search Console is the URL Inspection tool, which lets you confirm whether a specific page is allowed or blocked for Googlebot.

For instance, you could plug in the URL of a key landing page and run a live test. The result tells you whether crawling is allowed or whether the page is blocked by robots.txt, which points you back to the Disallow rule you need to review.

This is incredibly handy for troubleshooting. If a page isn't getting indexed, a quick check here can tell you if an overly aggressive Disallow rule is the culprit. By testing your most important pages—and a few you definitely want blocked—you can be confident your robots.txt is guiding crawlers exactly as you intended.
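You can also run the same kind of check locally before you publish. The sketch below takes a robots.txt and a list of pages you expect to be crawlable or blocked, then reports anything that doesn't behave as intended. The rules, paths, and the find_mismatches name are all hypothetical, and Python's parser doesn't mirror every nuance of Google's matching, so treat Search Console as the final word.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(robots_txt, expectations, user_agent="Googlebot"):
    """Return (path, expected, actual) for every path that behaves unexpectedly.

    `expectations` maps a URL path to True (should be crawlable) or False.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = []
    for path, should_allow in expectations.items():
        actual = parser.can_fetch(user_agent, path)
        if actual != should_allow:
            mismatches.append((path, should_allow, actual))
    return mismatches


# Hypothetical rules with an overly broad Disallow, plus the pages we care about.
robots_txt = """\
User-agent: *
Disallow: /p
"""
expectations = {
    "/pricing": True,         # key landing page, must stay crawlable
    "/private/notes": False,  # meant to be blocked
    "/blog/": True,
}

for path, expected, actual in find_mismatches(robots_txt, expectations):
    print(f"{path}: expected allowed={expected}, got allowed={actual}")
```

Here the overly aggressive Disallow: /p rule catches /pricing along with /private/, which is precisely the kind of culprit you'd want to spot before Google does.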

Common Robots txt Mistakes That Hurt SEO

Just getting a robots.txt file live is a good start, but I’ve seen my fair share of simple mistakes that quietly sabotage a site's SEO. Having the file is one thing; making sure it’s strategically sound is another.

One of the most common blunders is trying to use robots.txt to hide sensitive pages or to de-index something that’s already been crawled. It’s critical to remember that robots.txt is not a security tool. Blocking a path like /private-documents/ only suggests crawlers stay away. It doesn't actually lock down the content, and if there's a link to that folder anywhere on the web, search engines might still find and index it without ever visiting the page.

Using The Wrong Tool for The Job

If you want a page removed from search results, robots.txt is absolutely the wrong tool to reach for. A Disallow directive will stop Google from crawling the page again, but it does nothing to remove a page that’s already in its index.

Even worse, blocking the page means Google can't see a noindex tag, which is the correct way to ask for a page to be removed from search results.

It’s a simple rule of thumb: use a noindex meta tag to get pages out of the search results. Use robots.txt strictly to manage crawl traffic. Mixing these up is how you end up with pages stubbornly stuck in the index.
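To catch that mix-up early, you can cross-check the two lists. Here's a rough sketch: given your robots.txt and the paths you've tagged noindex, it returns the pages where the tag can never be read because crawling is blocked. The paths and the helper name are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def hidden_noindex_pages(robots_txt, noindex_paths, user_agent="Googlebot"):
    """Return noindex pages that crawlers are blocked from fetching.

    A blocked page's noindex tag can never be read, so the tag has no effect.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in noindex_paths if not parser.can_fetch(user_agent, p)]


# Hypothetical setup: both pages carry a noindex meta tag in their HTML.
robots_txt = """\
User-agent: *
Disallow: /thank-you
"""
noindex_paths = ["/thank-you", "/old-offer"]

print(hidden_noindex_pages(robots_txt, noindex_paths))
```

Any path this returns needs its Disallow rule lifted, at least until Google has recrawled the page and seen the noindex tag.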

This distinction is massive. When you create a robots txt file, your main goal should be managing your crawl budget, not trying to control indexing or site security.

The High Cost of Accidental Blocking

A simple typo in your robots.txt can have a real financial impact, especially if you’re running ads. Imagine accidentally blocking a key landing page or your entire /blog/ directory. Every single pound you spend on ads driving people to those URLs is pretty much wasted if they can't find you again through a simple Google search.

With digital channels expected to account for around 82% of total UK ad spend in 2025, this isn't a trivial mistake. A misconfigured file blocking important landing pages or pricing info wastes a slice of every pound spent on getting new customers. For a startup paying for growth, even a small drop in organic visibility from bad crawl rules can mean thousands of pounds in lost pipeline. You can discover more insights about UK digital ad spend on WeAreSocial.com.

Targeting Specific Bots

Another missed opportunity is failing to use user-agent-specific rules. Sure, User-agent: * works as a catch-all, but what if you want to block a specific "bad bot" that’s scraping your content while giving Googlebot full access?

You can easily set up tailored rules for different crawlers.

User-agent: BadBot
Disallow: /

User-agent: Googlebot
Allow: /

This kind of precision lets you manage crawler behaviour effectively, protecting your site from unwanted traffic without accidentally kneecapping your SEO. A well-managed robots.txt isn't just a technical file; it’s a powerful asset that protects your investment in both content and paid ads.
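To see how those per-bot groups play out, here's a quick sketch using Python's standard-library parser. "BadBot" is the stand-in name from the example, "SomeOtherBot" is invented to show what happens to a crawler with no group of its own, and the blog path is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The per-bot groups from above; "BadBot" is a stand-in, not a real crawler.
rules = """\
User-agent: BadBot
Disallow: /

User-agent: Googlebot
Allow: /
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

results = {
    bot: parser.can_fetch(bot, "/blog/post")
    for bot in ("BadBot", "Googlebot", "SomeOtherBot")
}
for bot, allowed in results.items():
    print(f"{bot}: allowed={allowed}")
```

BadBot is shut out, Googlebot gets in, and the unnamed crawler is allowed too because nothing in the file addresses it. Bear in mind this only models well-behaved bots; a scraper that ignores robots.txt won't be stopped by any of it.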

A Few Common Questions About Robots.txt and Webflow

Even with a good grasp of the basics, a few questions always pop up when dealing with robots.txt, especially when you're working inside a platform like Webflow. Let's get a few common points of confusion cleared up.

What Happens If I Don’t Have a Robots.txt File?

If your site is missing a robots.txt file, search crawlers just assume they have free rein to crawl every single page they can find. For a tiny website, this might not seem like a big deal, but it's far from ideal.

Without any guidance, crawlers will end up wasting their limited "crawl budget" on pages that don't matter, like admin login screens or backend utility pages. Putting a simple robots.txt in place gives you crucial control over how search engines see and understand your site.

Can I Use Robots.txt to Remove a Page From Google?

This is probably the single biggest misunderstanding about robots.txt. A Disallow rule only tells search engines not to crawl a page in the future. It absolutely does not remove a page that Google has already indexed.

Here's the catch: if you block an indexed page with robots.txt, you're actually stopping Google from seeing the noindex tag, which is the correct way to get a page removed. To properly de-index a page, add a "noindex" meta tag to it, then ask Google to remove it through your Search Console account.

How Often Does Google Actually Check My Robots.txt File?

Google typically holds on to a cached version of your robots.txt file for up to 24 hours. This means any changes you push live from your Webflow project settings might take a full day to be picked up by Googlebot.

While you can try to speed this up by requesting a recrawl from the robots.txt report in Google Search Console (the replacement for the old Robots.txt Tester), a little bit of patience is usually all you need for your new rules to start working.


Webflow Developer, UK

I love to solve problems for start-ups & companies through great low-code webflow design & development. 🎉
